TL;DR

In today’s speech announcing the extension of India’s national lockdown till 3rd May, 2020, the country’s Prime Minister requested citizens to download the Aarogya Setu application. Unfortunately, the application remains a privacy minefield and does not adhere to the principles of minimisation, strict purpose limitation, transparency and accountability. IFF’s working paper analyses the application, highlights how it is inconsistent with the right to privacy and conceivably risks becoming a permanent system of mass surveillance, and suggests clear recommendations to arrest these risks.

Background

On April 13, 2020, IFF released its working paper titled “Privacy Prescriptions for technology interventions on COVID-19 in India”. A significant portion of this working paper is dedicated to the proliferation of experimental contact tracing applications, not just in India but across the world. India has also launched its own contact tracing solution called Aarogya Setu.

IFF’s working paper was alert to this, and Chapters 5-7 devote significant space to studying the emergent government and non-government practices, standard setting processes and first principles in the space. Chapter 7 of the paper studies three specific models of contact tracing: the Singapore Government’s Trace Together application, MIT’s Private Kit: Safe Paths initiative, and Aarogya Setu.

What is Contact Tracing?

According to the WHO, contact tracing occurs in three steps, namely (a) contact identification; (b) contact listing; and (c) contact follow up. In particular, contact tracing is a pillar which helps public health officials in containing the virus and slowing its pace of transmission. This pace of transmission is measured by the quantity R0 (R naught), which essentially denotes the average number of people to whom an infected person can spread the disease.
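To make the idea of R0 concrete, here is a minimal sketch of the arithmetic under a deliberately simplified assumption: every case infects exactly R0 others in each generation, with no immunity or intervention. The function name and the values 2.0 and 0.5 are purely illustrative and are not estimates for COVID-19.

```python
def cases_by_generation(r0, generations, initial_cases=1):
    """New cases per generation if each case infects r0 others on average.

    Simplified illustration only: real outbreaks do not grow this cleanly.
    """
    return [initial_cases * r0 ** n for n in range(generations + 1)]


# Hypothetical values: above 1 the outbreak grows, below 1 it fades.
growing = cases_by_generation(2.0, 5)
shrinking = cases_by_generation(0.5, 5)
print(growing)    # [1.0, 2.0, 4.0, 8.0, 16.0, 32.0]
print(shrinking)  # [1.0, 0.5, 0.25, 0.125, 0.0625, 0.03125]
```

The point of containment measures such as contact tracing is to push the effective value below 1, so that each generation of cases is smaller than the last.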

Contact tracing has traditionally been administered through on-ground personnel and volunteer armies. However, with the ubiquity of smartphones, which collect vast troves of personal information, governments across the world believe surveillance can aid rapid contact tracing. But owing to the peculiarities of the coronavirus, authorities across the world are beginning to realise the limited efficacy of location surveillance (both with cell tower data and with GPS signals).

As a result, governments and other groups have either released or are developing smartphone apps that use Bluetooth beacons or GPS signals to log instances where a user’s device comes in contact with another user’s device. When a user of the app is found to have Covid-19, contact tracing officers might be able to use the app to identify their close contacts in the prior days or weeks.
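As a rough illustration of this proximity-logging idea, the following Python sketch records encounters between devices and, once a user is confirmed positive, lists the identifiers seen within a look-back window. The names (`Encounter`, `close_contacts`), the 14-day window and the 15-minute threshold are assumptions made for this example; they are not taken from Aarogya Setu, Trace Together or Private Kit.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Encounter:
    """One logged proximity event (all fields are hypothetical)."""
    other_device_id: str      # pseudonymous identifier broadcast by the other phone
    seen_at: datetime         # when the beacon was observed
    duration_minutes: float   # how long the devices stayed in range


def close_contacts(log, diagnosed_at, lookback_days=14, min_minutes=15):
    """Return sorted device IDs met for long enough inside the look-back window.

    Minutes are summed per device, so several brief meetings with the
    same device can together cross the threshold.
    """
    window_start = diagnosed_at - timedelta(days=lookback_days)
    minutes_by_device = {}
    for e in log:
        if window_start <= e.seen_at <= diagnosed_at:
            minutes_by_device[e.other_device_id] = (
                minutes_by_device.get(e.other_device_id, 0.0) + e.duration_minutes
            )
    return sorted(d for d, m in minutes_by_device.items() if m >= min_minutes)


diagnosed = datetime(2020, 4, 14, 12, 0)
log = [
    Encounter("device-a", datetime(2020, 4, 10, 9, 0), 20),   # long and recent
    Encounter("device-b", datetime(2020, 4, 12, 18, 0), 5),   # short on its own
    Encounter("device-c", datetime(2020, 3, 20, 8, 0), 60),   # outside the window
    Encounter("device-b", datetime(2020, 4, 13, 18, 0), 12),  # 5 + 12 = 17 minutes
]
contacts = close_contacts(log, diagnosed)
print(contacts)  # ['device-a', 'device-b']
```

Note that this sketch needs only a pseudonymous identifier, a timestamp and a duration. It needs no location, name or phone number, which is why the amount of data an app collects beyond this is a design choice rather than a technical necessity.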

What’s the Risk?

With the creation of such systems come new risks of institutionalising mass surveillance. Critically, India lacks a comprehensive data protection law, has outdated surveillance and interception laws, and has no meaningful proposals for reform. In domains like disaster relief, most apps which are purported to be ‘contact tracing’ technologies often devolve into systems of movement control and lockdown enforcement.

A lot of technology solutions with no demonstrable scientific value to the national response can be passed off as being in the public interest. It leads to poor deployment of public resources and also makes it difficult for crisis responders to discern between “snake oil” and quality technology products. These risks are exacerbated in technology markets since there are no adequate checks and balances in development phases which ensure quality.

Such systems inadvertently discriminate against regions which have fewer concentrations of smartphones. Specifically, they can lead to harmful outcomes for people residing in economically weaker areas. In countries where public health systems are already creaking under the looming threat of capacity deficits, there is a risk that these systems may be overwhelmed prematurely if such apps wrongly urge people to take tests pre-emptively.

They may also exacerbate the risks associated with the harvesting of personal data like health information, and lead to the creation of new privacy-invasive systems.

How to prevent these risks?

Such systems must consider embedding the following principles among others:

- The usage of these applications should be voluntary

- All data should always be stored locally on people’s devices

- This data must be encrypted

- No government should be able to access it at a later point, so nothing should be centralised on a server

- Governments must appreciate that all such data, from location information, to proximity confirmations, to health status, to whether a person has been placed in isolation, is sensitive personal information.

- Privacy by design is more than just assurances that phone numbers are not recorded, all data is encrypted, pseudonymisation is deployed or that the use of the app is “voluntary” and based on consent. Most of these protections have known techniques of circumvention.

Therefore, we need to adhere to two principles:

- Strict limits (in terms of collection and duration) every step of the way; and

- Comprehensive evidence-based justifications every step of the way
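One way to picture strict limits on collection and duration is an on-device log that refuses to keep fields it does not need and purges records once they age out. The sketch below is only an illustration under stated assumptions: the class `LocalContactLog`, its allow-list of fields and the 30-day retention value are hypothetical and do not describe how any existing app is built. It also leaves out encryption at rest, which a real implementation would need.

```python
from datetime import datetime, timedelta

RETENTION_DAYS = 30  # hypothetical retention limit, chosen for illustration


class LocalContactLog:
    """On-device log that keeps only what the stated purpose needs."""

    # Collection limit: only these fields are ever stored.
    ALLOWED_FIELDS = ("device_id", "seen_at")

    def __init__(self, retention_days=RETENTION_DAYS):
        self.retention = timedelta(days=retention_days)
        self.records = []

    def add(self, **fields):
        # Anything outside the allow-list (name, GPS, phone number) is dropped.
        self.records.append({k: fields[k] for k in self.ALLOWED_FIELDS})

    def purge(self, now):
        # Duration limit: delete records older than the retention window.
        cutoff = now - self.retention
        before = len(self.records)
        self.records = [r for r in self.records if r["seen_at"] >= cutoff]
        return before - len(self.records)


contact_log = LocalContactLog()
contact_log.add(device_id="device-a", seen_at=datetime(2020, 3, 1), name="A", gps=(28.6, 77.2))
contact_log.add(device_id="device-b", seen_at=datetime(2020, 4, 10))
removed = contact_log.purge(now=datetime(2020, 4, 14))
print(removed, contact_log.records)
```

Because the limits live in the code path that writes and deletes data, they can be checked by anyone who can read the source, which is the link between minimisation and the transparency concerns raised in this post.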

Aarogya Setu compares unfavourably to Singapore and MIT

Consider the following aspects.

Is this for disease control or for other purposes?

In other countries, health authorities are leading the efforts to respond to COVID-19. For example, in Singapore only its health ministry can use these systems or have access to any limited data/interaction which is shared with them. European authorities are making similar commitments. They assure citizens that law enforcement personnel will not have access to these systems or the data therein.

In India, multiple committees have been set up in the context of Aarogya Setu or other technology responses to the coronavirus. But neither formal notifications nor press reports make any reference to major involvement of the Ministry of Health and Family Welfare. Instead, health authorities are tertiary institutional players.

To protect people’s right to privacy, countries (including Singapore) say that contact tracing will be used strictly for disease control and cannot be used to enforce lockdowns or quarantines. Aarogya Setu retains the flexibility to do just that, or to ensure compliance with legal orders and so on.

Minimisation? What Minimisation?

- Singapore monitors people’s interactions through Bluetooth beacons, MIT does it through GPS, and then there’s India which uses both.

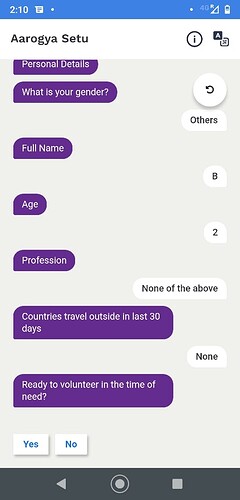

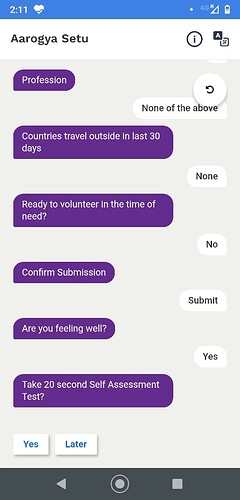

- Other apps collect just one data point, which is subsequently replaced with a scrubbed device identifier. India’s Aarogya Setu collects multiple data points of personal and sensitive personal information, which increases privacy risks.

No Transparency leads to discretion

The only information we have of the app is its frontend and its rather pedestrian Terms of Service (TOS) and Privacy Policy. Other projects release as much information as possible in pursuit of transparency. This may include an accompanying manifesto, technical specifications, general FAQs, and even its source code. Not only does Aarogya Setu go the opposite route but it also prohibits external good faith actors from reverse engineering the application for further scrutiny, information security and other related research which help facilitate stability.

Similarly, the app is capable of wrongfully identifying people as COVID-19 positive, as admitted in the app’s Terms of Service. We ask in our paper: does this mean people can be diagnosed as infected with coronavirus based on an application rather than an actual medical test? And does a false positive mean that a person cannot use public transport, go to work, or leave the city to visit friends? This evokes imagery of the Chinese model of surveillance during COVID-19, and we have highlighted these risks in the paper as well.

No Accountability and No Auditability

The Government has a blanket liability limitation clause inserted into its service agreements and privacy policies. This means citizens cannot hold the Government accountable or seek judicial remedy should they wish to ensure the Government’s processes are compliant with the right to privacy.

Second, the Government talks about certain obligations to delete certain personal data from its application after a 30 day time period. However, as expected, this claim comes with enough exceptions to facilitate government discretion. This is compounded by the fact that neither researchers nor individual users can actually check whether the Government has deleted people’s personal information, and they have no means of transparently auditing what the app is doing in the backend.

Risk of Permanence and Compromises to Autonomy | The Anonymisation Gambit

The app also runs very palpable risks of either expanding in scope or becoming a permanent surveillance architecture. People are describing the current times as 9/11 on steroids, and with 9/11 we saw the creation and proliferation of mass interception capabilities for some governments. First, the Government has failed to provide any defined period by when it intends to review, delete and ultimately destroy the systems and data collected under the Aarogya Setu project. Second, there are already reports which confirm that this server is being linked with other government datasets. Such linking increases the risk of permanent systems of mass surveillance.

Finally, the Government says that the TOS and Privacy Policy do not apply in any capacity to what falls under the category of anonymised data sets. The Government cannot just say something is anonymised and aggregated, and so no longer personally identifiable, without showing its citizens how it is ensuring this. This level of transparency is a minimum, since the risks that anonymised datasets pose to people’s informational privacy and security are well documented in information security communities. Considering the risk of these anonymised data sets becoming permanent systems of data analysis, the Government has to keep itself accountable to public scrutiny.

Conclusion

IFF has addressed all of these challenges and many more in its case studies, and the paper sets out a series of 17 recommendations to ensure people’s informational privacy is protected even in these uncertain times, in which Indian authorities are viewing civil liberties as an afterthought.

Links to IFF’s COVID-19 Related Work

- Working paper “Privacy prescriptions for technology interventions around Covid-19 in India” dated April 13, 2020 ( Google Docs version / PDF Version )

- Representation to the Department for Telecom on ensuring connectivity and protecting net neutrality due to higher dependency on telecom networks ( link )

- Representation to the Ministry of Health to issue an advisory against the disclosure of the names of persons placed under quarantine ( link )

- Petition before the Supreme Court to restore 4G connectivity in Jammu and Kashmir to properly equip healthcare professionals around Covid-19 ( link )